To ensure that AI functions remain reliable and safe over the long term, the project is developing a practical methodology for creating approval-relevant safety arguments, which will be tested and implemented in several use cases.

Approach

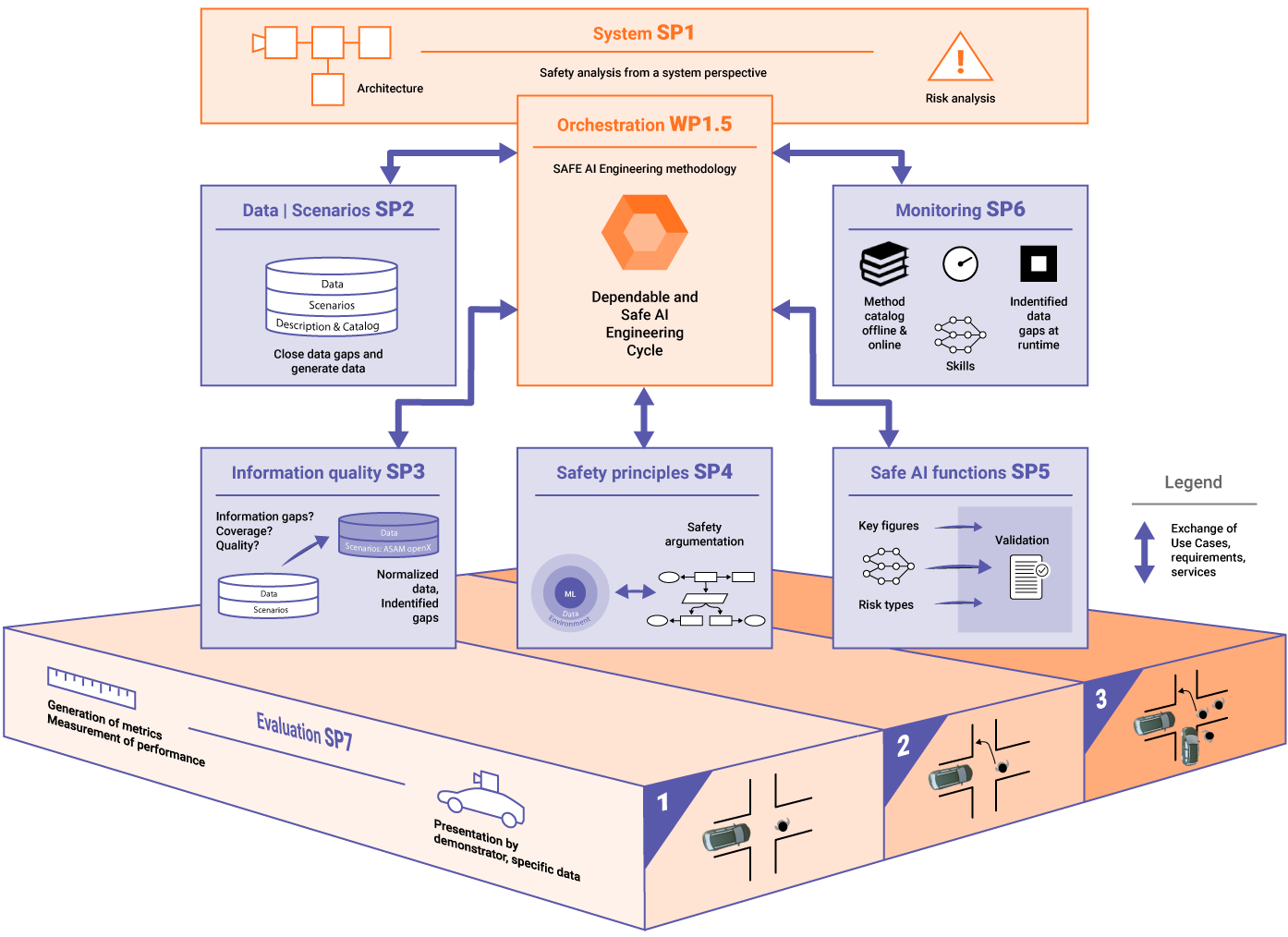

The methodology is developed using a camera-based AI perception function for pedestrian detection. Development proceeds in three iterative stages of functional evolution, each tied to a use case of increasing complexity. Each use case introduces new challenges, so the pedestrian detection function must be continuously improved and validated as it is developed and tested against these use cases. The collaboration and interconnection of the individual subprojects yield a comprehensive, approval-relevant safety case. The central goal of the project is a systematic approach to this interconnection (or orchestration): the Safe AI Engineering Methodology.

Project Structure

SP1 focuses on the systematic orchestration of an AI engineering methodology across the entire life cycle of an AI-based perception function. It also specifies the system requirements for AI properties and their limitations, and thus the limitations that the safety argumentation must cover. A control concept ensures that relevant artifacts are made available, integrated, and validated consistently across subprojects.
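As a rough illustration of what such a control concept has to track, the following Python sketch records which subproject provides each artifact, which subprojects require it, and whether it has been validated. The classes `Artifact` and `ArtifactRegistry` and the artifact names are hypothetical; the project's actual control concept is not specified here.

```python
from dataclasses import dataclass, field


@dataclass
class Artifact:
    """A safety-case artifact exchanged between subprojects (hypothetical model)."""
    name: str
    producer: str            # subproject that provides the artifact, e.g. "SP2"
    validated: bool = False  # set once the artifact has passed validation


@dataclass
class ArtifactRegistry:
    """Tracks which artifacts are provided and which subprojects depend on them."""
    artifacts: dict = field(default_factory=dict)  # artifact name -> Artifact
    needs: dict = field(default_factory=dict)      # consumer -> set of artifact names

    def provide(self, artifact: Artifact) -> None:
        self.artifacts[artifact.name] = artifact

    def require(self, consumer: str, name: str) -> None:
        self.needs.setdefault(consumer, set()).add(name)

    def open_issues(self) -> list:
        """List every required artifact that is missing or not yet validated."""
        issues = []
        for consumer in sorted(self.needs):
            for name in sorted(self.needs[consumer]):
                art = self.artifacts.get(name)
                if art is None:
                    issues.append(f"{consumer}: '{name}' not provided")
                elif not art.validated:
                    issues.append(f"{consumer}: '{name}' from {art.producer} not validated")
        return issues


# Example: SP7 depends on one missing artifact and one unvalidated artifact.
reg = ArtifactRegistry()
reg.provide(Artifact("scenario_database", "SP2", validated=True))
reg.provide(Artifact("uncertainty_metrics", "SP5"))
reg.require("SP4", "scenario_database")
reg.require("SP7", "uncertainty_metrics")
reg.require("SP7", "monitor_logs")
for issue in reg.open_issues():
    print(issue)
```

Keeping providers and consumers in one place makes gaps in the safety argumentation visible as a simple list of open issues rather than something discovered late during integration.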

In SP2, a consistent, adaptive, and scalable database is created by further developing existing tools, scenarios, and data sources. This database provides building blocks for safety arguments, closes targeted data gaps, makes hybrid data usable, and systematically supports data provision throughout the entire life cycle of safety-critical AI-based functions.
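One way to make "closing data gaps" concrete is a coverage check over scenario parameters. The sketch below assumes that each sample is tagged with discrete scenario attributes; it counts samples per parameter combination and reports combinations below a minimum count, which could then be targeted with additional real or synthetic data. The dimensions `occlusion` and `lighting`, the function `find_data_gaps`, and the `min_count` threshold are hypothetical.

```python
from collections import Counter
from itertools import product

# Hypothetical scenario dimensions; the actual database schema is not described.
DIMENSIONS = {
    "occlusion": ["none", "partial", "full"],
    "lighting": ["day", "dusk", "night"],
}


def find_data_gaps(samples, dimensions, min_count):
    """Return (combination, count) for every combination with < min_count samples."""
    # Count samples per combination, keyed in the order of `dimensions`.
    counts = Counter(tuple(s[d] for d in dimensions) for s in samples)
    gaps = []
    for combo in product(*dimensions.values()):
        if counts[combo] < min_count:
            gaps.append((dict(zip(dimensions, combo)), counts[combo]))
    return gaps


samples = (
    [{"occlusion": "none", "lighting": "day"}] * 5
    + [{"occlusion": "partial", "lighting": "day"}] * 2
)
for combo, count in find_data_gaps(samples, DIMENSIONS, min_count=3):
    print(combo, count)
```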

In SP3, concepts are developed for using the data generated in SP2 efficiently to train and validate AI systems. Information-theoretic methods for normalizing and evaluating information quality ensure that sensor data can be processed in a robust, abstracted, and safety-relevant manner.
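The section does not name the information-theoretic measures used in SP3. As one generic example of such a measure, the sketch below computes the Shannon entropy H = -Σ p_i log2 p_i of a histogram of sensor values and normalizes it to [0, 1] by dividing by its maximum, log2 of the bin count. It assumes values already scaled to [0, 1]; the function names and the bin count are illustrative.

```python
import math
from collections import Counter


def shannon_entropy(values, num_bins=16):
    """Entropy in bits of an equal-width histogram of values in [0, 1]."""
    if not values:
        return 0.0
    # Map each value to a bin index, clamping values outside [0, 1] to the edge bins.
    bins = Counter(min(max(int(v * num_bins), 0), num_bins - 1) for v in values)
    n = len(values)
    probs = [c / n for c in bins.values()]
    return sum(-p * math.log2(p) for p in probs)


def normalized_entropy(values, num_bins=16):
    """Entropy divided by its maximum, log2(num_bins), giving a score in [0, 1]."""
    return shannon_entropy(values, num_bins) / math.log2(num_bins)


constant_patch = [0.5] * 100                        # no variation: one bin
spread_patch = [(i + 0.5) / 16 for i in range(16)]  # exactly one value per bin
print(normalized_entropy(constant_patch), normalized_entropy(spread_patch))
```

Normalizing by the maximum makes scores comparable across sensors or histograms with different bin counts, which is one simple reading of "normalizing information quality".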

The core of SP4 is the development of a hybrid safety concept that integrates data-driven, causal-analytical, and monitor-based approaches, based on a needs analysis of safety-related principles according to ISO/PAS 8800, ISO 26262, and SOTIF (ISO 21448). Together with the other SPs, this enables a consistent, practical safety argumentation across system, data, and function modules.

SP5 is developing a safe, explainable, and robust camera-based perception function for pedestrians. Existing AI models are being extended with methods for quantifying uncertainty, increasing explainability, and improving robustness, complemented by quality metrics and safe software engineering. As a result, decisions become traceable, risks are reliably addressed, and the effectiveness of safety methods is measured and integrated into AI development.
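For uncertainty quantification, one common technique (not necessarily the one used in SP5) is a deep ensemble: several independently trained models score the same detection, and their disagreement indicates epistemic uncertainty. The sketch below assumes each ensemble member outputs a pedestrian probability and decomposes the entropy of the mean prediction into the mean member entropy (aleatoric part) and the remainder, the mutual information (epistemic part). The function names are hypothetical.

```python
import math
from statistics import mean, pvariance


def binary_entropy(p):
    """Entropy in bits of a Bernoulli(p) prediction."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -(p * math.log2(p) + (1 - p) * math.log2(1 - p))


def ensemble_uncertainty(scores):
    """Summarize the pedestrian probabilities of several ensemble members.

    total:     entropy of the mean prediction
    aleatoric: mean entropy of the individual members
    epistemic: total minus aleatoric (mutual information, i.e. disagreement)
    """
    p_mean = mean(scores)
    total = binary_entropy(p_mean)
    aleatoric = mean(binary_entropy(p) for p in scores)
    return {
        "mean": p_mean,
        "variance": pvariance(scores),
        "total": total,
        "aleatoric": aleatoric,
        "epistemic": total - aleatoric,
    }


agree = ensemble_uncertainty([0.9, 0.9, 0.9])     # members agree
disagree = ensemble_uncertainty([0.1, 0.9, 0.5])  # members disagree
print(round(agree["epistemic"], 3), round(disagree["epistemic"], 3))
```

Separating the two parts matters for safety arguments: high epistemic uncertainty points to inputs the models have not learned well (a data or model issue), whereas aleatoric uncertainty reflects ambiguity in the input itself, such as heavy occlusion.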

SP6 develops online and offline monitoring methods for the AI-based pedestrian detection. Online monitoring enables immediate safety responses while driving; offline monitoring uses historical data for targeted improvement and validation. Together, both approaches ensure the short- and long-term safety, robustness, and further development of the AI function in automated driving throughout its entire life cycle.
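The interplay of online and offline monitoring can be sketched as follows, assuming the perception function reports a per-frame uncertainty score. The hypothetical `OnlineMonitor` triggers a safety response only after the score exceeds a threshold for several consecutive frames, which suppresses single-frame spikes, and it logs every frame; `offline_hotspots` later scans that log. The threshold and patience values are illustrative, not project parameters.

```python
from dataclasses import dataclass, field


@dataclass
class OnlineMonitor:
    """Hypothetical runtime monitor for a per-frame uncertainty score."""
    threshold: float = 0.5  # illustrative value, not a project parameter
    patience: int = 3       # consecutive high-uncertainty frames before an alarm
    log: list = field(default_factory=list)  # (frame_id, score, alarm) records
    _streak: int = field(default=0, init=False, repr=False)

    def step(self, frame_id: int, uncertainty: float) -> bool:
        """Process one frame; return True if a safety response should be triggered."""
        self._streak = self._streak + 1 if uncertainty > self.threshold else 0
        alarm = self._streak >= self.patience
        self.log.append((frame_id, uncertainty, alarm))
        return alarm


def offline_hotspots(log, threshold):
    """Offline pass over recorded frames: ids whose uncertainty exceeded threshold."""
    return [frame_id for frame_id, score, _ in log if score > threshold]


monitor = OnlineMonitor(threshold=0.5, patience=2)
alarms = [monitor.step(i, u) for i, u in enumerate([0.2, 0.7, 0.8, 0.3, 0.9])]
print(alarms)
print(offline_hotspots(monitor.log, threshold=0.5))
```

The online path only needs constant state per frame, while the offline pass can afford to look at every recorded frame, including isolated spikes that never raised an alarm.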

SP7 aims to conduct a comprehensive safety assessment of the developed AI methods across their entire life cycle. To this end, it defines an evaluation concept with suitable metrics and triggers to quantify uncertainties and systematically compare residual risks against the safety objectives. Continuously applying the safety objectives and analyzing the results during development and operation enables targeted further development and provides artifacts for approval-relevant safety arguments.
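To illustrate how a residual risk might be compared against a safety objective, the sketch below assumes the objective is stated as a maximum failure probability per test case (for example, a missed pedestrian) and that test outcomes are independent. It computes a one-sided Clopper-Pearson upper confidence bound from k failures in n trials by bisection on the binomial CDF and checks it against the target. The function names and the example target are hypothetical. With zero failures the bound reduces to 1 - α^(1/n), which at 95% confidence is close to the familiar "rule of three" estimate 3/n.

```python
import math


def binom_cdf(k, n, p):
    """P(X <= k) for X ~ Binomial(n, p); intended for small k."""
    return sum(math.comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k + 1))


def upper_bound(k, n, confidence=0.95, tol=1e-10):
    """One-sided Clopper-Pearson upper bound on the failure probability."""
    if k >= n:
        return 1.0
    alpha = 1.0 - confidence
    lo, hi = 0.0, 1.0
    # The CDF decreases in p, so bisect for the p where it equals alpha.
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if binom_cdf(k, n, mid) > alpha:
            lo = mid  # the bound lies above mid
        else:
            hi = mid
    return hi


def meets_objective(k, n, target, confidence=0.95):
    """True if the upper bound on the residual failure rate is within the target."""
    return upper_bound(k, n, confidence) <= target


print(upper_bound(0, 3000))
print(meets_objective(0, 3000, target=1e-3), meets_objective(2, 3000, target=1e-3))
```

Using an upper bound rather than the observed failure rate k/n keeps the comparison conservative: a small test campaign cannot demonstrate a demanding objective even if it observes no failures.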

Use cases with increasing complexity

USE CASE 01

A stationary vehicle and a stationary pedestrian on a straight road.

USE CASE 02

A stationary vehicle and a moving pedestrian at an intersection. The pedestrian may be partially occluded.

USE CASE 03

Several stationary and moving vehicles, bicycles, and several moving pedestrians at and on an intersection; pedestrians may be partially or completely occluded.
