AI engineering as an enabler for a well-founded safety argument throughout the entire life cycle of an AI function.

For greater safety in automated driving

The Safe AI Engineering research project is an important step towards a generally accepted and practical safety certification for AI functions that can be used for homologation and is therefore approval-relevant.

The aim is to develop a holistic methodology for validating safety-critical AI functions in automated driving, covering planning, development, testing, application, monitoring, and continuous improvement. The research project focuses on increasing safety and improving the integration of AI functions.

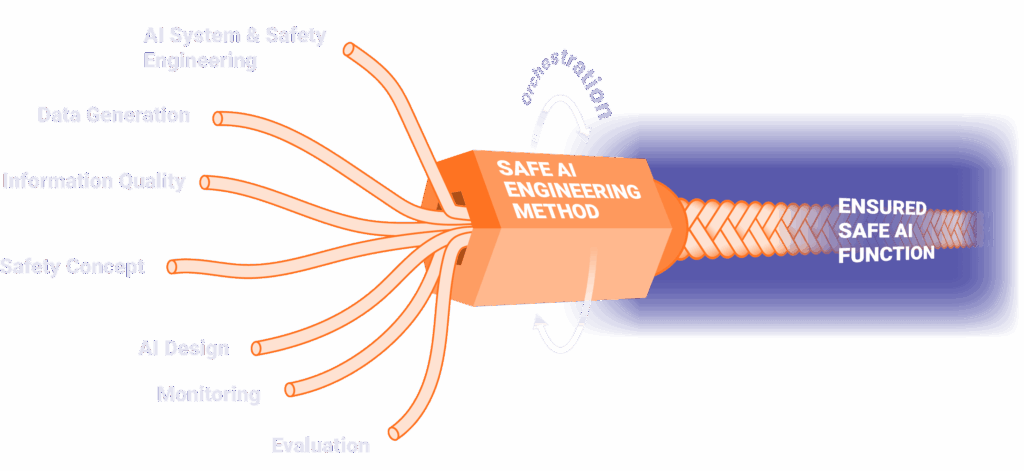

Safety Rope

As with a safety rope, a reliable safety argument is characterized by the consistent interweaving of various aspects. These aspects, represented by the sub-aspects of the project, are woven together into a robust structure through a process known as orchestration, which, like a braiding machine, twists the threads into a secure rope.

In this way, Safe AI Engineering creates the basis for an AI engineering method that underpins a generally accepted and practical proof of safety, so that safety-critical AI systems can be brought to market.

For greater safety in automated driving

The Safe AI Engineering research project is an important step towards a generally accepted and practical safety certification for AI functions that can be used for homologation and is therefore approval-relevant.

The aim is to develop a holistic methodology for validating safety-critical AI functions in automated driving – from planning, development, testing, application, and monitoring to continuous improvement. The research project focuses on increasing safety and better integration of AI features.

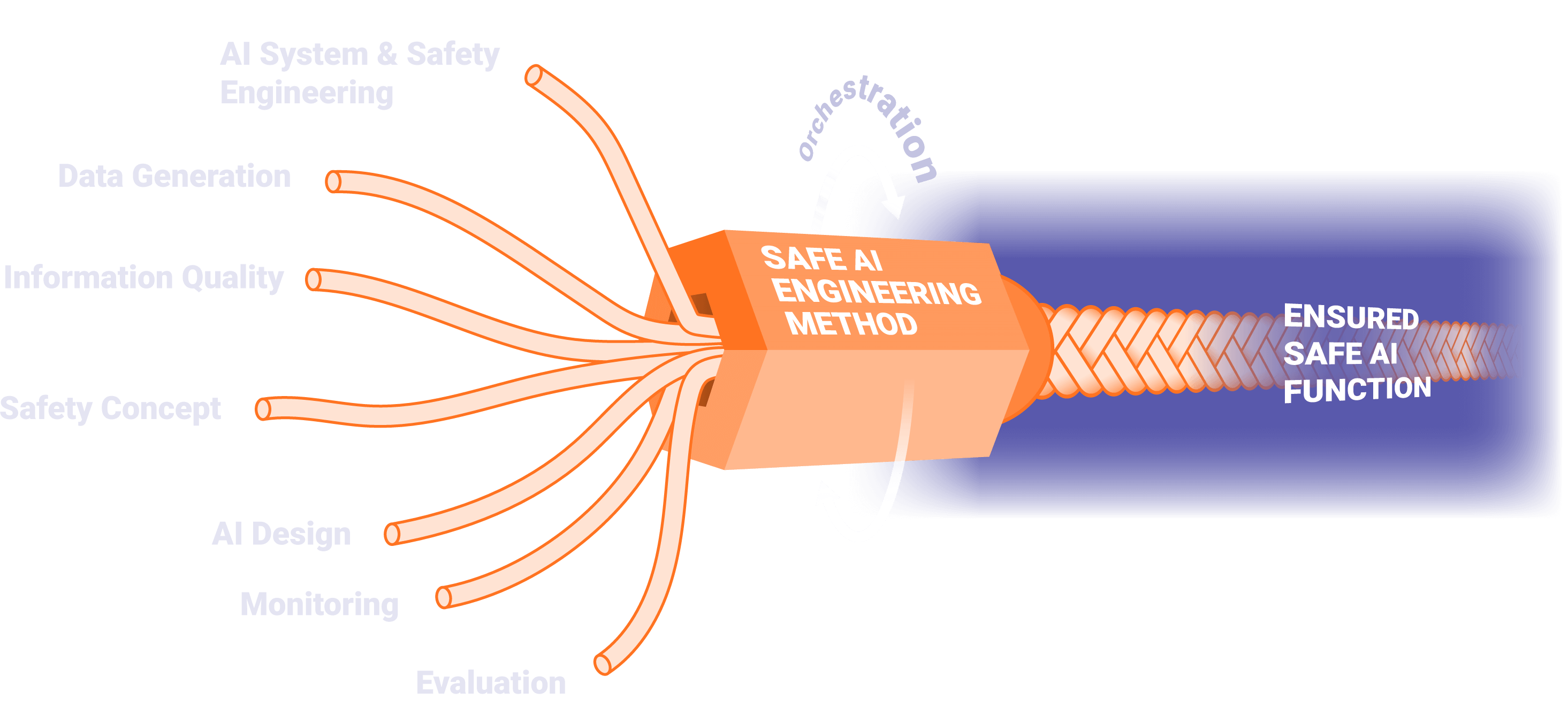

Safety Rope

As with a safety rope, a reliable safety argument is characterized by the consistent interweaving of various aspects. These aspects – represented by the sub-aspects of the project – are woven together into a robust structure through a process known as orchestration, which, similar to a braiding machine, twists the threads into a secure rope.

In this way, Safe AI Engineering creates the basis for an AI engineering method that forms the foundation for a generally accepted, practical verification of safety-critical AI in the market.

Facts & Figures

Project Budget: €34.5 million

Consortium Lead: Dr. Ulrich Wurstbauer (Luxoft GmbH) and Prof. Dr. Frank Köster (DLR)

Consortium: 23 Partners

Funding: €17.2 million

Duration: 36 Months (March 2025 – February 2028)

Consortium